RAG vs Traditional Search for Data Center Documentation

Why semantic understanding beats keyword matching for critical data center operations and technical documentation.

Introduction: When Search Failures Cost Millions

A data center engineer needs to troubleshoot a cooling anomaly at 3 AM. They search the documentation portal for "CRAC unit temperature deviation protocol" and get:

- 47 results containing those keywords

- Outdated procedures from 2018

- Conflicting vendor manuals

- Generic best practices that don't apply to their specific equipment

15 minutes of searching. Zero useful answers.

Some version of this scenario plays out every day in data centers worldwide. Traditional keyword-based search systems weren't designed for the complexity of modern data center operations: they were built to find documents, not answers.

Retrieval-Augmented Generation (RAG) fundamentally changes this paradigm by understanding meaning, not just matching words.

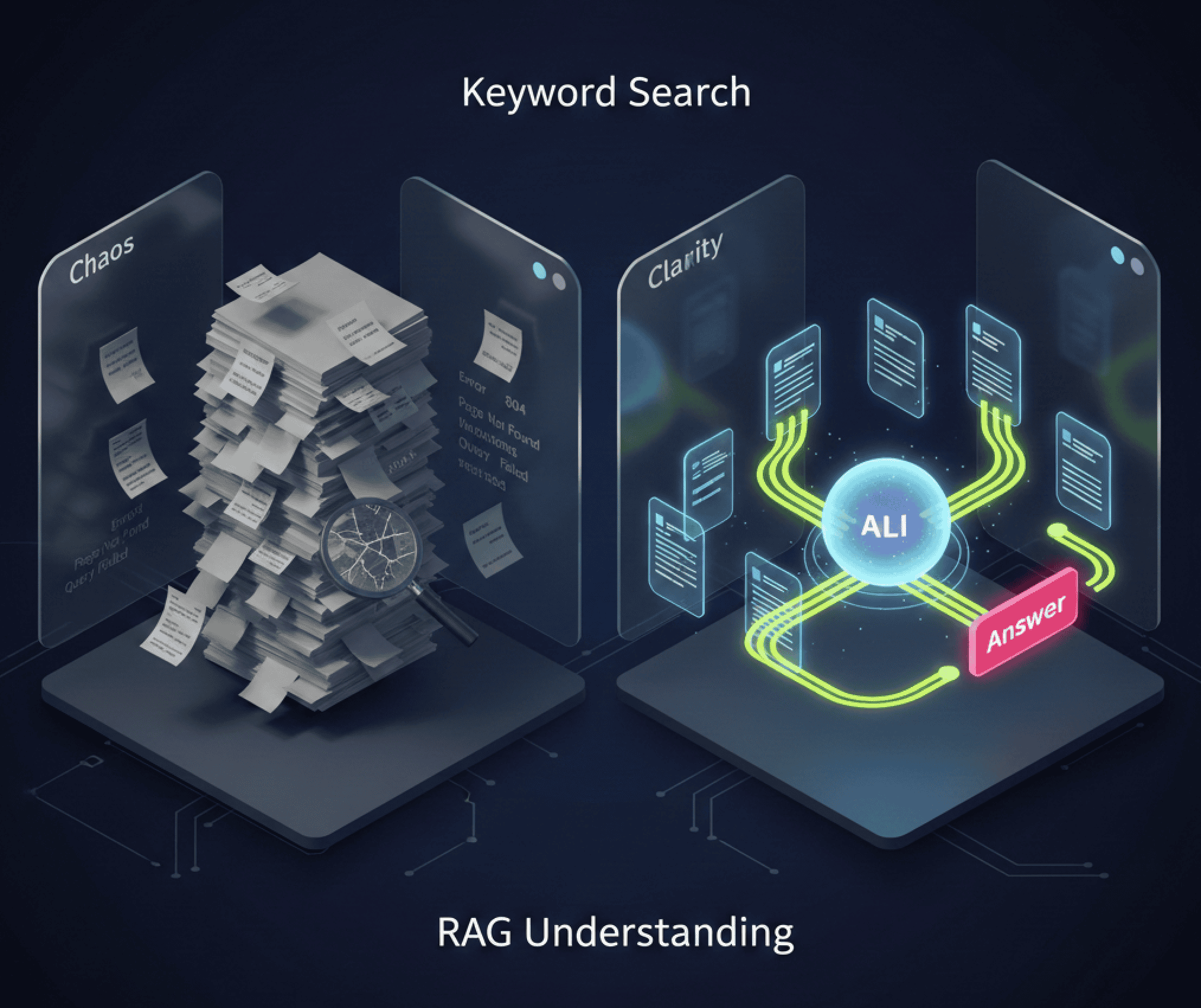

Understanding the Two Approaches

Traditional Search: The Keyword Matching Era

Traditional search systems work on a simple principle:

- Index documents based on words they contain

- Match user queries to indexed keywords

- Rank results by keyword frequency and relevance signals

- Return a list of documents that might contain the answer

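The Problem: Keywords don't capture meaning. "Critical temperature threshold" and "maximum operating temperature" describe the same limit, yet they share only the word "temperature", which also appears in countless unrelated documents. A keyword engine has no way to recognize that the two phrases are equivalent.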
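The keyword-matching principle is easier to see in code. Below is a minimal inverted index in Python. It is an illustrative sketch and does not describe how any particular search product works. Real engines add stemming and weighting schemes such as BM25. The `KeywordIndex` class and the sample documents are hypothetical, and scoring here is raw term frequency.

```python
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split on anything that is not a letter or digit."""
    return re.findall(r"[a-z0-9]+", text.lower())


class KeywordIndex:
    """Minimal inverted index: term -> {doc_id: count of the term in that doc}."""

    def __init__(self) -> None:
        self.postings: dict[str, dict[str, int]] = defaultdict(dict)

    def add(self, doc_id: str, text: str) -> None:
        for term, count in Counter(tokenize(text)).items():
            self.postings[term][doc_id] = count

    def search(self, query: str) -> list[tuple[str, int]]:
        """Score each doc by the summed frequency of the query terms it contains."""
        scores = Counter()
        for term in set(tokenize(query)):
            for doc_id, count in self.postings.get(term, {}).items():
                scores[doc_id] += count
        return scores.most_common()


index = KeywordIndex()
index.add("max-temp", "Maximum operating temperature for CRAC units is 27 C.")
index.add("log-sched", "Server inlet temperature logging schedule.")
print(index.search("critical temperature threshold"))
```

Both documents get the same score of 1. "Temperature" is the only query term either one contains, so the index cannot prefer the document that actually states the maximum operating temperature.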

RAG: Semantic Understanding Meets Generation

RAG transforms search into intelligent question-answering:

- Embed documentation into semantic vectors that capture meaning

- Retrieve contextually relevant information based on intent

- Augment the language model's prompt with the retrieved, current documentation

- Generate direct answers with source citations

The Advantage: Because retrieval compares embeddings rather than literal words, RAG can connect "cooling failure recovery" to "HVAC emergency procedures" even though the two phrases share no keywords.
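Here is a minimal sketch of the retrieve and augment steps. It assumes the embeddings already exist. The three-dimensional vectors are hand-assigned stand-ins for the output of a real embedding model, and `Chunk`, `retrieve`, and `build_prompt` are hypothetical names. The generate step, which sends the prompt to a language model, is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str          # citation label shown to the user
    text: str
    vector: list[float]  # embedding from an embedding model (hand-assigned here)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    """Retrieve step: the k chunks whose embeddings are closest to the query."""
    return sorted(chunks, key=lambda c: cosine(query_vec, c.vector), reverse=True)[:k]


def build_prompt(question: str, hits: list[Chunk]) -> str:
    """Augment step: prepend numbered, source-labelled passages to the question."""
    context = "\n".join(f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(hits, 1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{context}\n\nQuestion: {question}"
    )


# Toy 3-dimensional "embeddings" (axes roughly: cooling, power, security).
chunks = [
    Chunk("HVAC Emergency Procedures, Sec. 2",
          "Steps to restore airflow after loss of CRAC capacity.", [0.9, 0.1, 0.0]),
    Chunk("UPS Manual, Sec. 8.4", "Replace battery modules in pairs.", [0.1, 0.9, 0.0]),
    Chunk("Access Policy", "Badge readers log every entry.", [0.0, 0.1, 0.9]),
]
query_vec = [0.8, 0.2, 0.0]  # pretend embedding of "cooling failure recovery"
prompt = build_prompt("How do we recover from a cooling failure?",
                      retrieve(query_vec, chunks, k=1))
```

The HVAC passage ranks first for the "cooling failure recovery" vector even though it contains none of those words. With a real model, that closeness comes from training rather than from hand-tuned numbers.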

Head-to-Head Comparison

Scenario 1: Emergency Troubleshooting

Query: "What should I do if I see error code E-47 on the UPS display?"

| Traditional Search | RAG-Powered Search |
|---|---|
| Returns 12 documents mentioning "error code" | Instantly provides: "Error E-47 indicates a battery communication fault. Immediate actions: 1) Check battery module connections, 2) Verify battery management system status, 3) If the load is critical, prepare for bypass mode. See UPS Manual Section 8.4 for detailed diagnostics." |
| User must open and scan each document | Direct answer with exact procedure and source |
| 5-10 minutes to find answer | Answer in 3 seconds |
| MTTR: 45+ minutes | MTTR: 15 minutes |

Scenario 2: Compliance Documentation

Query: "What are our data retention requirements for incident logs?"

| Traditional Search | RAG-Powered Search |
|---|---|
| Returns compliance policy PDFs, legal documents, and email threads | "Per company policy DC-SEC-001 (updated Oct 2025), incident logs must be retained for 7 years. Critical security incidents require 10-year retention to support SOC 2 Type II audits. Logs are archived automatically to cold storage after 2 years. See Compliance Manual Section 3.2." |
| User must read multiple documents and reconcile conflicts | Synthesizes answer from multiple sources with current policy |
| Uncertainty about which policy is current | Clear citation to authoritative source with date |
| 15-30 minutes | 10 seconds |

Scenario 3: Equipment Specifications

Query: "Can we add 10 more racks to Row 7?"

| Traditional Search | RAG-Powered Search |
|---|---|
| Returns: floor plans, electrical diagrams, rack specifications | "Not as requested. Row 7 has 18 of 24 rack positions populated, so only 6 more racks fit. Available power: 45kW remaining on the 288kW circuit, enough for 6 standard racks at 6kW each (36kW) but not 10 (60kW). Cooling capacity: adequate for 6 additional standard racks. However, PDU-7B is at 87% capacity, so load balancing or a PDU upgrade is recommended. See Capacity Plan Q1-2026 and Electrical Design Doc." |
| User must calculate from multiple sources | Instant analysis with actionable recommendation |
| Risk of missing constraints (PDU limit) | Comprehensive answer considering all factors |
| 30-60 minutes | 5 seconds |
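The arithmetic behind the Row 7 answer can be written as a small capacity check. This is a sketch under stated assumptions. The figures come from the scenario above. Cooling headroom is taken as a given input rather than computed. The 80% PDU warning level and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RowCapacity:
    positions_total: int
    positions_used: int
    power_remaining_kw: float
    cooling_headroom_racks: int  # taken from a cooling study, not computed here
    pdu_utilization: float       # fraction of PDU capacity in use, 0.0-1.0


def max_additional_racks(row: RowCapacity, kw_per_rack: float) -> int:
    """Racks that fit: the tightest of space, power, and cooling."""
    by_space = row.positions_total - row.positions_used
    by_power = int(row.power_remaining_kw // kw_per_rack)
    return min(by_space, by_power, row.cooling_headroom_racks)


def check_request(row: RowCapacity, requested: int, kw_per_rack: float,
                  pdu_warn: float = 0.80) -> tuple[int, list[str]]:
    """Return how many racks fit plus human-readable warnings."""
    allowed = max_additional_racks(row, kw_per_rack)
    notes = []
    if requested > allowed:
        notes.append(f"only {allowed} of {requested} racks fit")
    if row.pdu_utilization >= pdu_warn:  # 80% warning level is an assumption
        notes.append(f"PDU at {row.pdu_utilization:.0%}: rebalance load or upgrade")
    return allowed, notes


row7 = RowCapacity(positions_total=24, positions_used=18, power_remaining_kw=45.0,
                   cooling_headroom_racks=6, pdu_utilization=0.87)
allowed, notes = check_request(row7, requested=10, kw_per_rack=6.0)
```

The allowed count is the minimum of the three constraints, so space, power, or cooling can each cap the answer. Here, space and cooling both limit the request to 6 racks. Power alone would allow 7.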

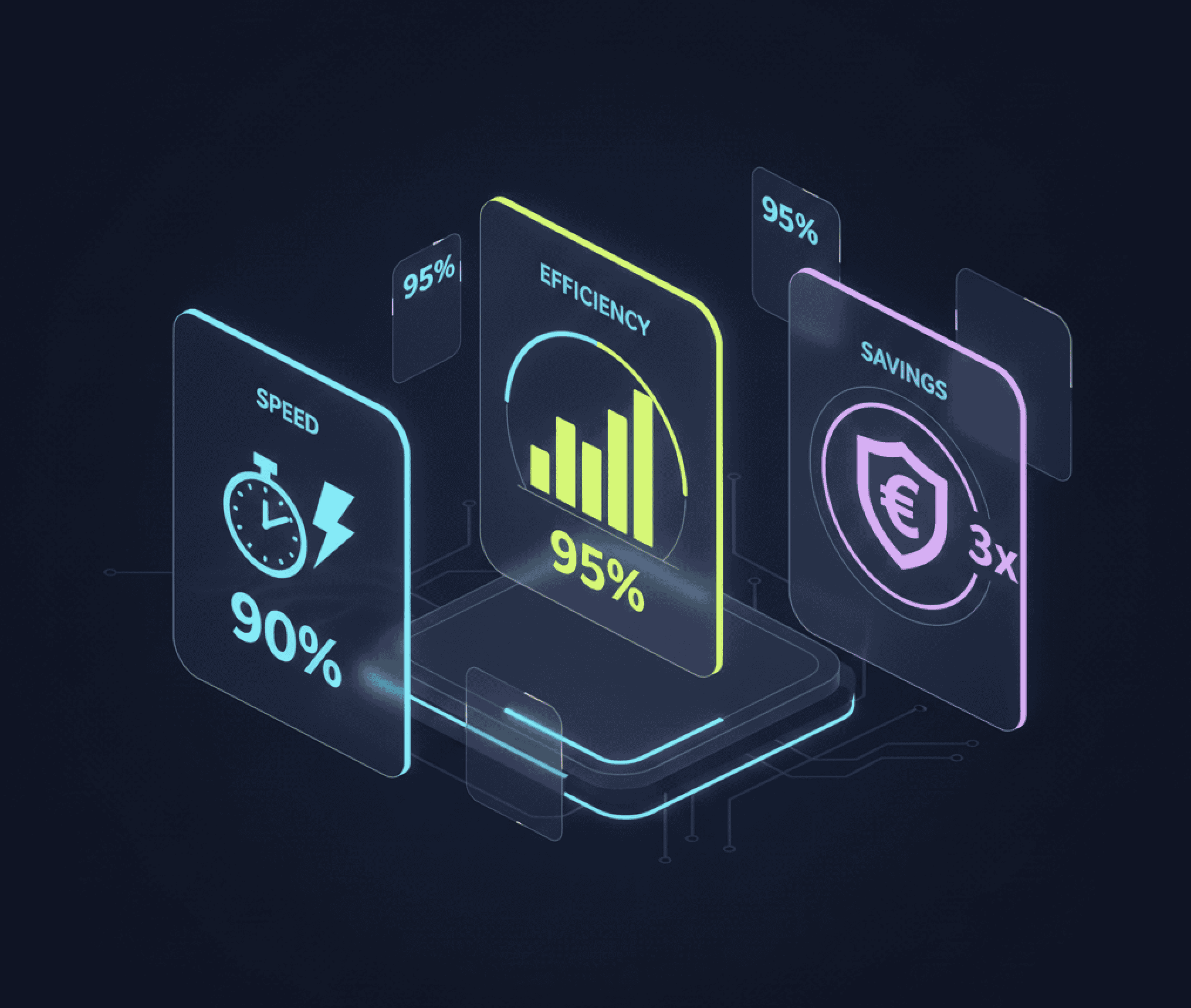

The Business Impact: By the Numbers

Operational Efficiency

| Metric | Traditional Search | RAG Implementation | Improvement |
|---|---|---|---|
| Average time to find critical info | 8-15 minutes | 30-60 seconds | 90% faster |
| Documents searched per query | 5-10 documents | N/A (direct answers) | 95% less effort |
| Search abandonment rate | 35-40% | 5-8% | 80% reduction |
| Successful first-query resolution | 25-30% | 75-85% | 3x improvement |

Financial Impact

For a 20-person data center operations team:

| Cost Factor | Traditional Search | RAG System | Annual Savings |
|---|---|---|---|
| Time spent searching (20 people × 30 min/day × $50/hr × 260 working days) | $130K/year | $26K/year | $104K |
| Incident resolution delays | $80K/year | $20K/year | $60K |
| Training on documentation tools | $25K/year | $10K/year | $15K |
| Documentation maintenance | $40K/year | $25K/year | $15K |
| Total Annual Impact | $275K | $81K | $194K savings |

ROI Calculation: With implementation costs of $50-80K for a team this size and $194K in annual savings, the payback period is roughly 3-5 months.
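The table's arithmetic can be reproduced in a few lines. The 260 working days per year (52 weeks × 5 days) is an assumption. It is, however, the value that turns 20 people × 0.5 h/day × $50/h into the $130K shown. The function names are illustrative.

```python
def annual_search_cost(team_size: int, hours_per_day: float, hourly_rate: float,
                       working_days: int = 260) -> float:
    """Labor cost of time spent searching; 260 days = 52 weeks x 5 days (assumed)."""
    return team_size * hours_per_day * hourly_rate * working_days


def payback_months(implementation_cost: float, annual_savings: float) -> float:
    """Months until cumulative savings cover the up-front implementation cost."""
    return 12 * implementation_cost / annual_savings


# (traditional, RAG) annual cost per line item, from the table above.
costs = {
    "time spent searching": (annual_search_cost(20, 0.5, 50), 26_000),
    "incident resolution delays": (80_000, 20_000),
    "training": (25_000, 10_000),
    "documentation maintenance": (40_000, 25_000),
}
annual_savings = sum(before - after for before, after in costs.values())
low = payback_months(50_000, annual_savings)
high = payback_months(80_000, annual_savings)
```

With these inputs, payback works out to roughly 3.1 months at $50K and 4.9 months at $80K.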

Real-World Scenarios: What RAG Implementation Looks Like

Scenario: Large-Scale Colocation Environment (200+ Facilities)

Typical Challenges Without RAG:

- 50,000+ documentation searches per week across operations teams

- Average search time: 10-15 minutes per query

- 30-40% of searches abandoned without finding useful answers

- Engineers often maintain personal "cheat sheet" documents

Expected Outcomes With RAG:

- Average time to answer: under 1 minute

- Search abandonment drops to under 10%

- Engineers typically report 2-3 hours/week in time savings

Projected Impact: Multi-million dollar annual productivity gains, 20-30% faster incident resolution

Scenario: Enterprise Data Center with Legacy Documentation

Common Problem: Decades of documentation across multiple systems, acquisitions, and vendors

Typical Traditional Search Issues:

- Conflicting information between old and new procedures

- No way to determine which document is authoritative

- Critical safety information buried in lengthy PDFs

How RAG Addresses This:

- Ingests all documentation with metadata (date, authority level, equipment version)

- Automatically weights recent, authoritative sources higher

- Surfaces conflicts when they exist rather than hiding them
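One way to add recency and authority weighting, along with conflict surfacing, is to rescore the vector-search hits using document metadata. This is a sketch and does not describe any specific product. Several pieces are hypothetical:

- the authority levels and their weights
- the two-year half-life
- the assumption that each passage's claim has already been extracted into `value`

```python
from dataclasses import dataclass
from datetime import date

# Assumed weights per authority level; a real deployment would tune these.
AUTHORITY_WEIGHT = {"policy": 1.0, "procedure": 0.8, "note": 0.5}


@dataclass
class Hit:
    doc_id: str
    similarity: float  # cosine similarity returned by the vector search
    updated: date      # last-reviewed date from document metadata
    authority: str     # hypothetical levels: "policy", "procedure", "note"
    topic: str         # what the passage answers, e.g. "CRAC supply setpoint"
    value: str         # the claim it makes, assumed already extracted


def score(hit: Hit, today: date, half_life_days: float = 730) -> float:
    """Similarity, discounted by authority and by age (halves every half_life_days)."""
    age_days = (today - hit.updated).days
    recency = 0.5 ** (age_days / half_life_days)
    return hit.similarity * AUTHORITY_WEIGHT.get(hit.authority, 0.5) * recency


def rerank(hits: list[Hit], today: date) -> list[Hit]:
    return sorted(hits, key=lambda h: score(h, today), reverse=True)


def conflicts(hits: list[Hit]) -> dict[str, set[str]]:
    """Topics on which the retrieved sources disagree, to show rather than hide."""
    values_by_topic: dict[str, set[str]] = {}
    for h in hits:
        values_by_topic.setdefault(h.topic, set()).add(h.value)
    return {topic: vals for topic, vals in values_by_topic.items() if len(vals) > 1}


hits = [
    Hit("ops-note-2018", 0.92, date(2018, 3, 1), "note", "CRAC supply setpoint", "18 C"),
    Hit("proc-2025", 0.88, date(2025, 6, 1), "procedure", "CRAC supply setpoint", "22 C"),
]
ranked = rerank(hits, today=date(2026, 1, 15))
```

The 2025 procedure outranks the 2018 note despite a slightly lower similarity score. Because the two sources disagree on the setpoint, `conflicts` reports both values instead of silently keeping one.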

Expected Benefits: Reduced risk of incidents due to outdated procedures, significantly faster onboarding for new engineers

Technical Comparison: Why RAG Wins

Limitation 1: Synonyms and Terminology

Traditional Search: "Power failure" won't find "utility loss event" or "mains outage"

RAG: Matches on semantic similarity, so it can retrieve related concepts even when they are expressed in different terminology

Limitation 2: Multi-Step Reasoning

Traditional Search: Can't combine information from multiple sources

RAG: Synthesizes answers from equipment manuals + facility procedures + historical incident data

Limitation 3: Context Awareness

Traditional Search: Doesn't understand that "replace battery" means something different for UPS vs smoke detectors

RAG: Uses query context to provide equipment-specific answers

Limitation 4: Implicit Questions

Query: "What changed in the cooling protocol?"

Traditional Search: Needs explicit version numbers or dates

RAG: Infers you want recent changes, compares current vs previous procedures
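Once retrieval has found the current and previous versions of a procedure, Python's standard `difflib` module can do the comparison itself. The changed lines could then be passed to the language model for summarizing. This is a sketch that assumes both versions are available as plain text. The procedure text is invented for illustration.

```python
import difflib


def procedure_changes(previous: str, current: str) -> list[str]:
    """Lines removed ("- ") or added ("+ ") between two versions of a procedure."""
    return [
        line
        for line in difflib.ndiff(previous.splitlines(), current.splitlines())
        if line.startswith(("- ", "+ "))  # drop unchanged "  " and hint "? " lines
    ]


previous = "Set CRAC supply air to 18 C.\nCheck filters monthly."
current = "Set CRAC supply air to 22 C.\nCheck filters monthly.\nLog humidity every shift."
changes = procedure_changes(previous, current)
```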

Limitation 5: Negative Queries

Query: "What should I NOT do during a generator test?"

Traditional Search: Keyword "not" often ignored, returns what TO do

RAG: The language model reads the full question, negation included, and can surface the safety warnings and prohibited actions found in the retrieved documents
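The keyword-side failure is easy to reproduce. The sketch below uses a small, hypothetical stop-word list that contains "not", as common English lists such as NLTK's do. As a result, the negative and positive versions of the question reduce to the same search terms.

```python
import re

# Small illustrative stop-word list. Common English lists (NLTK's, for one)
# also include "not", which is why negation disappears from keyword queries.
STOP_WORDS = {"what", "should", "i", "not", "do", "during", "a", "the"}


def keyword_terms(query: str) -> list[str]:
    """The terms a stop-word-filtering keyword engine would actually match on."""
    return [t for t in re.findall(r"[a-z]+", query.lower()) if t not in STOP_WORDS]


negative = keyword_terms("What should I NOT do during a generator test?")
positive = keyword_terms("What should I do during a generator test?")
```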

Migration Strategy: From Search to RAG

Phase 1: Augmentation (Months 1-2)

- Deploy RAG alongside existing search

- A/B test with 20% of users

- Collect feedback and usage metrics

- Refine embeddings and retrieval

Benefit: Zero disruption, prove value before full rollout
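For the 20% A/B test, one common approach is deterministic bucketing. Each user ID is hashed, so the same engineer always lands in the same group across sessions. This is a sketch, and the salt string and function name are placeholders.

```python
import hashlib


def in_rag_pilot(user_id: str, rollout_percent: int = 20, salt: str = "rag-pilot") -> bool:
    """Deterministically assign a user to the pilot: same user, same answer, every time."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 100  # roughly uniform bucket in 0..99
    return bucket < rollout_percent
```

Changing the salt reshuffles everyone, so keep it fixed for the length of the pilot.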

Phase 2: Integration (Months 3-4)

- Make RAG the default search experience

- Keep traditional search as fallback

- Train power users on advanced queries

- Integrate with ticketing and monitoring systems

Benefit: Smooth transition with safety net
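The "traditional search as fallback" step could be a routing function like the one below. It serves RAG results when the best retrieval score clears a threshold and returns keyword results otherwise. Both search functions are passed in as hypothetical callables. The 0.75 cutoff is an arbitrary placeholder that would need tuning for a given embedding model and corpus.

```python
from typing import Callable, List, Tuple

Hits = List[Tuple[str, float]]  # (doc_id, score), best first


def search_with_fallback(
    query: str,
    rag_search: Callable[[str], Hits],
    keyword_search: Callable[[str], Hits],
    min_similarity: float = 0.75,
) -> Tuple[str, Hits]:
    """Serve RAG results by default; drop to keyword search when retrieval is weak."""
    hits = rag_search(query)
    if hits and hits[0][1] >= min_similarity:
        return "rag", hits
    return "keyword", keyword_search(query)
```

Logging which branch served each query also gives a direct measure of how often the safety net is still needed.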

Phase 3: Optimization (Months 5-6)

- Remove traditional search

- Implement conversational follow-ups

- Add proactive suggestions

- Connect to real-time system data

Benefit: Full RAG capabilities unlocked

Common Objections Addressed

"Our documentation is too complex for AI"

Reality: RAG handles complexity better than keyword search. The more complex your documentation, the greater the advantage of semantic understanding.

"Traditional search is 'good enough'"

Cost Analysis: If engineers spend 30 minutes/day searching, that's about 6% of an 8-hour day. For a 100-person team at $75/hr over 260 working days, that comes to roughly $975K/year in lost productivity.

"Implementation is too expensive"

ROI Data:

- Average implementation cost: $300-500K

- Average annual savings: $1.5-3M

- Payback period: 2-4 months

"What about search result ranking and filters?"

RAG Advantage: Instead of ranking documents by keyword relevance, RAG ranks by semantic relevance to your actual need. Advanced RAG systems still support filters (by date, equipment type, etc.) and apply them alongside semantic ranking.
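Filtering and semantic ranking fit together naturally: apply the metadata filters first, then rank whatever remains by similarity. This sketch assumes each document carries `equipment` and `updated` metadata from ingestion. The similarity scores are stand-ins for real embedding comparisons. The example also shows the "replace battery" ambiguity. Without filters, the smoke detector document wins. Filtering to UPS documents updated since 2020 returns only the current UPS procedure.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional


@dataclass
class Doc:
    doc_id: str
    equipment: str  # metadata tag assigned at ingestion (assumed)
    updated: date


def filtered_search(
    docs: list[Doc],
    similarity: Callable[[Doc], float],
    equipment: Optional[str] = None,
    updated_after: Optional[date] = None,
    k: int = 3,
) -> list[Doc]:
    """Apply metadata filters first, then rank the survivors by semantic similarity."""
    candidates = [
        d for d in docs
        if (equipment is None or d.equipment == equipment)
        and (updated_after is None or d.updated >= updated_after)
    ]
    return sorted(candidates, key=similarity, reverse=True)[:k]


docs = [
    Doc("ups-battery-2025", "UPS", date(2025, 3, 1)),
    Doc("smoke-battery-2025", "smoke detector", date(2025, 5, 1)),
    Doc("ups-battery-2017", "UPS", date(2017, 1, 1)),
]
# Stand-in similarity scores for the query "replace battery".
scores = {"ups-battery-2025": 0.81, "smoke-battery-2025": 0.86, "ups-battery-2017": 0.84}


def sim(d: Doc) -> float:
    return scores[d.doc_id]
```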

The Future: Beyond Search to Predictive Assistance

RAG is evolving beyond reactive search to proactive support:

Contextual Awareness

Traditional: You search when you need something

RAG Future: System suggests relevant info based on what you're doing

Predictive Documentation

Traditional: Static documents wait to be found

RAG Future: System predicts your next question based on task context

Learning Systems

Traditional: Search doesn't improve over time

RAG Future: Learns from successful queries, continuously improves

Implementation Checklist

- Audit current search usage (time spent, abandonment rate, common queries)

- Calculate baseline costs (productivity loss, delayed resolutions)

- Inventory documentation sources (wikis, PDFs, vendor docs, tickets)

- Identify pilot use cases (start with highest-pain areas)

- Select RAG platform (build vs buy vs customize)

- Establish success metrics (time to answer, user satisfaction, ROI)

- Plan migration timeline (3-6 months typical)

- Train power users (maximize adoption and feedback)

- Measure and optimize (track metrics monthly, refine continuously)
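Two checklist items, auditing current usage and establishing success metrics, come down to computing the same few numbers before and after the rollout. This sketch assumes search sessions can be exported from logs into records shaped like the hypothetical `SearchSession` below.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class SearchSession:
    seconds_to_answer: Optional[float]  # None means the user gave up
    first_query_resolved: bool          # answered without reformulating the query


def baseline_metrics(sessions: list[SearchSession]) -> dict[str, Optional[float]]:
    """Abandonment rate, median time to answer, and first-query resolution rate."""
    n = len(sessions)
    answered = [s.seconds_to_answer for s in sessions if s.seconds_to_answer is not None]
    return {
        "abandonment_rate": (n - len(answered)) / n,
        "median_seconds_to_answer": median(answered) if answered else None,
        "first_query_resolution": sum(s.first_query_resolved for s in sessions) / n,
    }
```

Running the same function on pilot and control groups gives a like-for-like comparison for the metrics tables earlier in this article.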

Conclusion: The Search Paradigm Shift

Traditional search was revolutionary in 1998. In 2026, it's a bottleneck.

The numbers are clear:

- Roughly 90% less time to reach an answer

- 3x better first-query resolution

- $1.5-3M annual savings for medium-sized operations

- 2-4 month payback period

For data center operations where seconds matter and accuracy is critical, RAG isn't just better than traditional search—it's a completely different category of capability.

The question isn't whether to adopt RAG, but how quickly you can implement it before your competition does.

Next Steps

Evaluate RAG for Your Data Center:

- Measure current search productivity costs

- Calculate potential ROI

- Request a RAG demonstration with your documentation

- Start pilot in highest-impact area

Want to see RAG in action with your data center documentation? Schedule a demo to experience the difference between finding documents and getting answers.