RAG for Marketing & Sales: The Complete Guide to AI-Powered Knowledge Management

Learn how RAG transforms revenue team knowledge management. Covers contradiction detection, content maintenance, and real-time intelligence for sales and marketing ops.

You know the scenario. A rep is on a call, prospect asks about enterprise pricing, and suddenly they're digging through three different folders, two Slack threads, and a six-month-old deck that may or may not reflect current numbers. Meanwhile, the prospect waits.

Or this one: Marketing launches a campaign claiming your product does real-time analytics. Sales has been telling prospects it's batch processing. Product documentation says both, depending on which page you read. Nobody catches the contradiction until a prospect does—in the middle of a $500K deal.

Revenue teams don't have a content creation problem. They have a content chaos problem. And traditional tools—SharePoint, Confluence, even purpose-built enablement platforms—were designed to store content, not to ensure it's accurate, current, or consistent.

That's where RAG changes the equation.

What RAG Actually Means for Revenue Teams

RAG stands for Retrieval-Augmented Generation. Strip away the technical jargon, and here's what it does: when someone asks a question, the system first retrieves relevant content from your actual documents, then generates a response grounded in that content—with citations showing exactly where the answer came from.

This matters for revenue teams because it tackles the fundamental trust problem with AI: hallucination. Generic AI tools like ChatGPT, used without access to your documents, will confidently make up pricing, invent features, and fabricate case studies. RAG sharply reduces that risk: every answer is grounded in retrieved passages and traceable to your source documents.
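To make the retrieve-then-generate loop concrete, here is a minimal Python sketch. It is a toy built on stated assumptions: retrieval is simple word-overlap scoring rather than the semantic search a production system would use, the document names and contents are hypothetical, and the generation step is a stand-in that returns the retrieved passages with citations instead of calling a language model.

```python
from dataclasses import dataclass

@dataclass
class Passage:
    source: str  # document the passage came from (used as the citation)
    text: str

def tokenize(text: str) -> set[str]:
    # Lowercase words with surrounding punctuation stripped.
    # A real system would compare embeddings instead of raw words.
    return {w.strip(".,?!:;'\"()").lower() for w in text.split()} - {""}

def retrieve(query: str, corpus: list[Passage], k: int = 2) -> list[Passage]:
    """Return up to k passages that share words with the query, best first."""
    q = tokenize(query)
    scored = [(len(q & tokenize(p.text)), p) for p in corpus]
    scored = [(score, p) for score, p in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]

def answer(query: str, corpus: list[Passage]) -> str:
    """Stand-in for generation: answer only from retrieved text, citing each source."""
    hits = retrieve(query, corpus)
    if not hits:
        return "No supporting document found."  # decline instead of guessing
    return " ".join(f"{p.text} [{p.source}]" for p in hits)

# Hypothetical knowledge base for illustration.
corpus = [
    Passage("pricing-sheet.pdf", "Enterprise plans are billed annually per seat."),
    Passage("security-faq.docx", "We provide a SOC 2 Type II report on request."),
]
print(answer("How are enterprise plans billed?", corpus))
# Enterprise plans are billed annually per seat. [pricing-sheet.pdf]
```

The behavior that matters most here is the refusal path: the system answers from your documents or not at all, and whatever it returns carries a citation.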

But retrieval is just the starting point. The real value for marketing and sales comes from what you can build on top of it:

- Instant answers during live deals: "Do we have a case study for fintech companies over 1,000 employees?" gets an actual answer, not a folder to search through

- Contradiction detection: Surface mismatches between what marketing claims, what sales promises, and what product actually does

- Content maintenance: Flag outdated battlecards, stale playbooks, and documents that haven't been reviewed in months

- Knowledge capture: Turn tribal knowledge into searchable institutional memory before your top performers leave

The question isn't whether AI will transform how revenue teams manage knowledge. It's whether you'll adopt systems that make your content more trustworthy—or less.

The Solution Landscape: Understanding Your Options

If you're evaluating AI-powered knowledge management for your revenue team, you're looking at several distinct categories. Each has trade-offs worth understanding before you commit.

Traditional Sales Enablement Platforms

Examples: Highspot, Seismic, Showpad

These platforms excel at content organization, analytics, and sales-specific workflows. They'll tell you which decks get used, track content engagement, and integrate with your CRM. What they typically lack: semantic search that understands meaning, contradiction detection across documents, and automated content maintenance.

Best for: Teams that need robust analytics and sales-specific features, and have dedicated enablement staff to maintain content manually.

Enterprise Search Tools

Examples: Glean, Guru, Notion AI

These tools index content across multiple systems and provide search interfaces. That beats folder diving, but search quality varies by vendor, and some tools still lean heavily on keyword matching rather than semantic understanding. More importantly, they find documents; they don't verify whether those documents are accurate or consistent with each other.

Best for: Organizations that need unified search across many systems and can tolerate occasional retrieval of outdated content.

Generic AI Assistants

Examples: ChatGPT, Claude, Gemini (without RAG integration)

Powerful for general tasks, but dangerous for revenue operations. Without RAG integration they have no access to your internal content and can confidently fabricate answers. Ask "What's our enterprise pricing?" and you may get a made-up number delivered with complete confidence.

Best for: General productivity tasks that don't require internal knowledge.

RAG-Native Platforms

Examples: MojarAI, and emerging players in the space

Purpose-built for grounding AI responses in your actual documents. Key differentiators: source attribution on every answer, semantic search that understands context, and increasingly, automated content maintenance and contradiction detection.

Best for: Teams that need trustworthy AI answers backed by their actual content, especially those with large document volumes or compliance requirements.

Build-Your-Own RAG

Some organizations attempt to build custom RAG systems using vector databases and LLM APIs. This can work for technically sophisticated teams with specific requirements, but teams often underestimate the complexity of document parsing, chunking strategies, and ongoing maintenance.

Best for: Organizations with dedicated AI engineering resources and highly specialized requirements.

How to Evaluate RAG Solutions: A Framework for Revenue Teams

Not all RAG implementations are equal. Here's what separates systems that actually help from those that create new problems.

1. Source Attribution and Traceability

The question: When the system gives an answer, can you see exactly which documents it came from?

This isn't optional. If a rep quotes pricing to a prospect based on AI output, and that pricing is wrong, you need to know whether the AI hallucinated or retrieved outdated content. Source attribution is your audit trail.

What to look for:

- Direct links to source documents for every answer

- Confidence indicators when multiple sources conflict

- The ability to drill down into the specific passages used

2. Semantic Understanding vs. Keyword Matching

The question: Does the system understand what you're asking, or just match words?

A rep asking "What's our response to the security objection?" needs the system to understand that "security objection" might be discussed under "compliance concerns," "data protection," or "SOC 2 requirements" in different documents.

What to look for:

- Natural language queries that return relevant results even without exact keyword matches

- Understanding of synonyms, related concepts, and contextual meaning

- Ability to surface relevant content from unexpected sources
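The gap between keyword and semantic matching shows up even in a toy example. Real systems get vectors from a learned embedding model; to keep this sketch dependency-free, the vectors below are small hand-assigned stand-ins (an assumption, not real embeddings), with each dimension loosely standing for a topic. Keyword search finds nothing for "security objection", while similarity over meaning finds both security-related documents.

```python
import math

# Hypothetical 3-dimensional "embeddings" on the axes [security, pricing, onboarding].
# In a real system an embedding model produces vectors with hundreds of dimensions.
DOCS = {
    "SOC 2 requirements and data protection FAQ": [0.9, 0.1, 0.0],
    "Compliance concerns: how we answer them": [0.8, 0.0, 0.2],
    "Enterprise price list": [0.0, 1.0, 0.1],
}

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: values near 1.0 mean the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def keyword_search(query: str, titles) -> list[str]:
    """Return titles sharing at least one literal word with the query."""
    words = set(query.lower().split())
    return [t for t in titles if words & set(t.lower().split())]

def semantic_search(query_vec: list[float], docs: dict, threshold: float = 0.7) -> list[str]:
    """Return titles whose vectors are similar to the query vector, best first."""
    scores = {title: cosine(query_vec, vec) for title, vec in docs.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [t for t in ranked if scores[t] >= threshold]

query = "security objection"
query_vec = [1.0, 0.0, 0.1]  # hypothetical embedding of the query
print(keyword_search(query, DOCS))       # [] because no words are shared
print(semantic_search(query_vec, DOCS))  # both security-related documents
```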

3. Contradiction Detection

The question: Can the system identify when your documents disagree with each other?

This is the capability most solutions lack, and the one that prevents the most embarrassing failures. According to Gartner's 2024 survey, 90% of marketing and sales executives report conflicting functional priorities between departments, and inconsistent messaging erodes buyer confidence. Your RAG system should catch those inconsistencies before prospects do.

What to look for:

- Alerts when retrieved documents contain conflicting information

- Cross-document analysis that flags inconsistencies proactively

- The ability to surface "Marketing claims X, but Product docs say Y" conflicts
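One simple way to approach cross-document contradiction detection is to compare structured claims. The sketch below assumes an upstream step (not shown; in practice often an extraction model) has already turned each document into (topic, value) claims, and the document names and claims are hypothetical. It then flags every topic on which sources disagree, producing the "Marketing claims X, but Product docs say Y" style of alert.

```python
from collections import defaultdict

# Hypothetical output of an upstream extraction step (not shown):
# document -> {topic: value that document asserts}.
CLAIMS = {
    "marketing/launch-campaign.pptx": {"analytics_mode": "real-time"},
    "sales/discovery-playbook.docx": {"analytics_mode": "batch"},
    "product/architecture.md": {"analytics_mode": "batch", "sso": "supported"},
    "sales/security-faq.pdf": {"sso": "supported"},
}

def find_contradictions(claims: dict[str, dict[str, str]]) -> list[str]:
    """Return one alert per topic on which documents assert different values."""
    by_topic = defaultdict(lambda: defaultdict(list))  # topic -> value -> [documents]
    for doc, doc_claims in claims.items():
        for topic, value in doc_claims.items():
            by_topic[topic][value].append(doc)
    alerts = []
    for topic in sorted(by_topic):
        values = by_topic[topic]
        if len(values) > 1:  # two or more distinct values means a conflict
            parts = [f"'{v}' in {', '.join(docs)}" for v, docs in values.items()]
            alerts.append(f"{topic}: " + " vs ".join(parts))
    return alerts

for alert in find_contradictions(CLAIMS):
    print(alert)
```

Exact string comparison is the weak point of this sketch: "real-time" and "realtime" would count as a conflict, and a paraphrased claim would slip through. A production system needs value normalization or model-based comparison, which is where much of the difficulty lies.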

4. Content Freshness and Maintenance

The question: How does the system handle outdated content?

According to Datategy's research on content effectiveness, content accuracy affects up to 40% of content effectiveness—and content decay is one of the primary reasons enablement programs fail. Reps stop trusting playbooks because they've been burned by outdated information.

What to look for:

- Flags for content that hasn't been reviewed in X months

- Detection of references to outdated processes, deprecated features, or former employees

- Automated alerts when new content contradicts existing documents
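A basic freshness check combines two signals from this list: review age and references to things that no longer exist. The review window, the watch list of outdated terms, and the documents below are hypothetical; a real platform would read review dates from document metadata and use smarter matching than substring search.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class Doc:
    name: str
    last_reviewed: date
    text: str

# Hypothetical watch list: retired features, old plan names, departed employees.
OUTDATED_TERMS = ["legacy dashboard", "starter plan", "jane doe"]

def freshness_flags(docs: list[Doc], today: date, max_age_days: int = 180) -> list[tuple[str, str]]:
    """Return (document, reason) pairs for content that is stale or outdated."""
    cutoff = today - timedelta(days=max_age_days)
    flags = []
    for doc in docs:
        if doc.last_reviewed < cutoff:
            flags.append((doc.name, f"not reviewed since {doc.last_reviewed.isoformat()}"))
        lowered = doc.text.lower()
        for term in OUTDATED_TERMS:
            if term in lowered:  # plain substring match; real systems would be smarter
                flags.append((doc.name, f"mentions outdated term '{term}'"))
    return flags

docs = [
    Doc("battlecard-acme.pdf", date(2023, 11, 1), "Position our Legacy Dashboard against Acme."),
    Doc("pricing.xlsx", date(2024, 6, 1), "Current tiers: Growth and Enterprise."),
]
for name, reason in freshness_flags(docs, today=date(2024, 7, 1)):
    print(name, "->", reason)
```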

5. Integration Depth

The question: Does the system work where your team already works?

The best knowledge system is useless if reps have to leave their CRM, email, or call platform to access it. Integration isn't just about syncing data—it's about surfacing answers in context.

What to look for:

- Native integrations with your CRM (Salesforce, HubSpot)

- Browser extensions or embedded widgets for real-time access

- API access for custom workflows and automation

6. Access Controls and Compliance

The question: Can you control who sees what?

Enterprise revenue teams have legitimate reasons to restrict content access—regional pricing variations, confidential competitive intel, pre-announcement product information. Your RAG system needs to respect these boundaries.

What to look for:

- Role-based access controls that mirror your existing permissions

- Audit trails for compliance and security reviews

- Data residency options for regulated industries
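The design choice that matters most for access control in RAG is where the check happens: permissions should be enforced before retrieved passages reach the model, so restricted text can never leak into a generated answer. Here is a minimal sketch of that ordering, assuming each document carries a set of allowed roles copied from your existing permission system; the roles, documents, and audit-record format are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Doc:
    name: str
    allowed_roles: frozenset[str]  # mirrored from the source system's permissions

def visible_docs(docs: list[Doc], user_roles: set[str]) -> list[Doc]:
    """Default-deny filter: keep a document only if the user holds an allowed role.
    Run this before ranking and generation so restricted text never reaches the model."""
    return [d for d in docs if d.allowed_roles & user_roles]

def audit_record(user: str, query: str, docs: list[Doc]) -> dict:
    """Minimal audit-trail entry: who asked what, and which sources were eligible."""
    return {"user": user, "query": query, "sources": [d.name for d in docs]}

# Hypothetical documents and roles.
library = [
    Doc("emea-pricing.xlsx", frozenset({"sales-emea"})),
    Doc("competitive-intel.docx", frozenset({"sales-leadership"})),
    Doc("product-overview.pdf", frozenset({"all-staff"})),
]
eligible = visible_docs(library, {"all-staff", "sales-emea"})
print(audit_record("rep@example.com", "EMEA enterprise pricing?", eligible))
```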

The Hidden Problem: Knowledge Base Maintenance

Here's what most RAG discussions miss: retrieval is only as good as what you're retrieving from.

Organizations invest heavily in creating sales playbooks, competitive battlecards, and marketing assets. They rarely invest in maintaining them. The result: knowledge bases that decay faster than they're updated.

According to research from Lystloc on field sales challenges, lack of sync between sales and marketing leads to inconsistent messaging and poor lead handoffs—and content maintenance is consistently cited as a top reason enablement programs fail. Reps learn not to trust the wiki because they've been burned by outdated information. So they ask Slack instead—or worse, they guess.

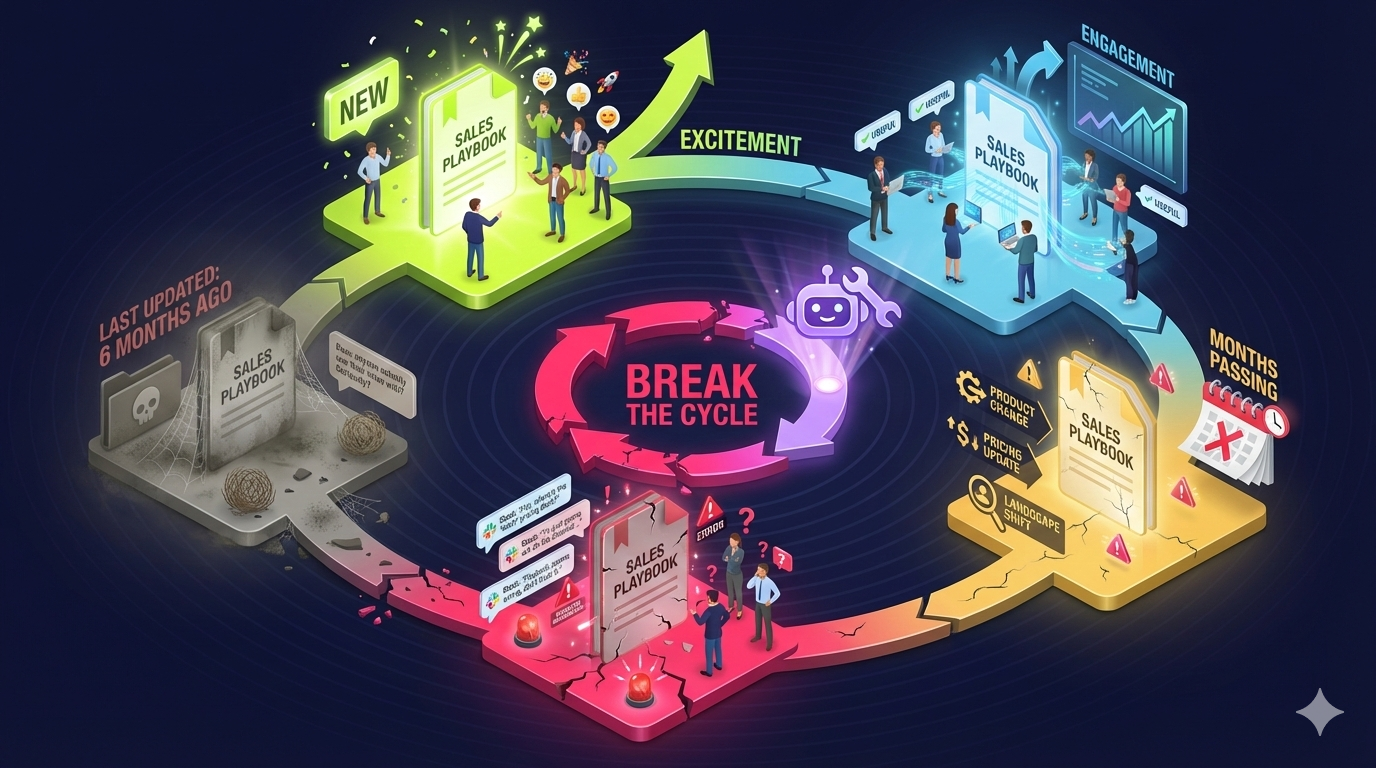

The Content Decay Cycle

- Launch: New playbook ships with great fanfare

- Adoption: Reps use it for a few weeks

- Drift: Product changes, pricing updates, competitive landscape shifts

- Distrust: Reps find outdated information, lose confidence

- Abandonment: "Does anyone actually use their sales wiki?" becomes a running joke

Traditional enablement platforms have no mechanism to break this cycle. They can tell you which content is accessed, but not which content is accurate.

AI-Powered Maintenance: The Missing Layer

This is where next-generation RAG platforms diverge from basic retrieval systems. Advanced implementations include maintenance agents that:

- Detect inconsistencies: Flag when a sales deck contradicts product documentation

- Identify staleness: Surface content that references deprecated features, old pricing, or former employees

- Suggest updates: When new content is added, identify older documents that may need revision

- Learn from feedback: When users report bad answers, automatically investigate source documents

The vision is a self-improving knowledge base—one where bad experiences trigger automatic quality improvements, not just frustrated Slack messages.
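The "learn from feedback" behavior can be pictured as a small loop: when a user flags an answer, every document cited by that answer is queued for review, and documents that keep drawing complaints rise to the top. This is a sketch of the idea under stated assumptions, not any vendor's implementation; the `ReviewQueue` class and its methods are hypothetical.

```python
from collections import Counter

class ReviewQueue:
    """Hypothetical review queue fed by user reports on bad answers."""

    def __init__(self) -> None:
        self.reports: Counter[str] = Counter()  # document name -> number of reports

    def report_bad_answer(self, cited_sources: list[str]) -> None:
        # Every document that informed the flagged answer becomes a review candidate.
        # set() makes one report count once per document, even if it was cited twice.
        for source in set(cited_sources):
            self.reports[source] += 1

    def next_for_review(self, n: int = 3) -> list[str]:
        # Most-reported documents first, so repeat offenders get fixed first.
        return [doc for doc, _ in self.reports.most_common(n)]

queue = ReviewQueue()
queue.report_bad_answer(["pricing-sheet.pdf", "faq.md"])
queue.report_bad_answer(["pricing-sheet.pdf"])
print(queue.next_for_review())  # ['pricing-sheet.pdf', 'faq.md']
```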

Real-World Impact: What the Numbers Show

Let's ground this in specifics. Here's what research tells us about the problems RAG can address:

The Time Drain

- Sales teams spend 20-30% of their time on RFP responses (Stack AI), hunting through documents and customizing answers manually

- Reps waste significant time on non-selling activities like data entry, research, and record-keeping (Lystloc)

- Marketing teams report that content creation takes too long, often hours or days per piece (Stensul)

The Trust Problem

- 30-40% of CRM data is bad (Salesmate), leading to poor lead qualification and wasted effort

- 60% of sales forecasts are off by more than 20% (Kairntech), often due to fragmented data and manual processes

- Content accuracy affects up to 40% of content effectiveness (Datategy)

The Alignment Gap

- Lack of sync between sales and marketing leads to inconsistent messaging and poor lead handoffs (Lystloc)

- Different teams relying on different data sources create conflicting narratives (Gartner)

- 74% of sales professionals are concerned about economic uncertainty affecting deal cycles (HubSpot), making accurate information even more critical

The Opportunity

RAG implementations that address these pain points report:

- 60-80% reduction in RFP response time when answers can be automatically pulled from existing documentation

- Significant improvement in new rep ramp time when institutional knowledge is searchable

- Reduced deal-killing inconsistencies when contradiction detection catches conflicts before prospects do

Implementation Considerations: What Actually Works

If you're evaluating RAG for your revenue team, here's what separates successful implementations from expensive disappointments.

Start with a Specific Use Case

"Better knowledge management" is too vague. Successful implementations target specific, measurable problems:

- RFP acceleration: Can we cut response time by 50% while maintaining quality?

- New rep onboarding: Can we reduce ramp time by giving new hires instant access to institutional knowledge?

- Competitive intelligence: Can we ensure battlecards are always current and consistent?

- Content consistency: Can we catch contradictions before they reach prospects?

Pick one. Prove value. Expand from there.

Content Quality Matters More Than AI Quality

The most sophisticated RAG system in the world can't help if your source documents are a mess. Before implementation:

- Audit your content: What exists? What's current? What's contradictory?

- Establish ownership: Who's responsible for maintaining each content type?

- Define freshness standards: How often should battlecards be reviewed? Pricing sheets? Product documentation?

Many organizations discover that the RAG implementation process forces a long-overdue content cleanup. That's a feature, not a bug.

Plan for Ongoing Maintenance

RAG isn't a one-time implementation. Your content changes, your products evolve, your competitive landscape shifts. Budget for:

- Regular content audits: Quarterly at minimum

- Feedback loops: How do users report problems? How are those problems addressed?

- System tuning: Retrieval quality can be improved over time with better chunking, metadata, and embedding strategies
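Chunking is one of the tuning knobs named above. A common baseline splits each document into fixed-size word windows that overlap, so text near a boundary appears in two neighboring chunks, and tags each chunk with metadata pointing back to its source. This sketch shows that baseline only; the sizes are illustrative defaults, and many systems chunk by headings, sentences, or tokens instead.

```python
def chunk_words(text: str, source: str, size: int = 200, overlap: int = 40) -> list[dict]:
    """Split text into overlapping windows of `size` words, tagged with metadata."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    # The range bound skips windows that would add no words beyond the previous one.
    for start in range(0, max(len(words) - overlap, 1), step):
        chunks.append({
            "source": source,     # lets every answer cite its document
            "start_word": start,  # position metadata for drilling into passages
            "text": " ".join(words[start:start + size]),
        })
    return chunks

sample = " ".join(f"w{i}" for i in range(12))
for chunk in chunk_words(sample, "playbook.md", size=5, overlap=2):
    print(chunk["start_word"], chunk["text"])
```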

Measure What Matters

Vanity metrics ("We have 10,000 documents indexed!") tell you nothing about value. Track:

- Answer accuracy: Are the responses correct? How often do users report problems?

- Time savings: How long did this task take before? How long now?

- Adoption: Are people actually using the system? For what?

- Trust indicators: Are reps citing the system in calls? Or still asking Slack?
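Once the system logs each answer and any user feedback, these metrics reduce to simple arithmetic. The sketch assumes a hypothetical log format (one record per answered question with two boolean flags) and hypothetical before/after timings; it illustrates how the calculations work, not benchmark results.

```python
from dataclasses import dataclass

@dataclass
class AnswerLog:
    user: str
    reported_wrong: bool  # user flagged the answer as incorrect (accuracy signal)
    used_in_call: bool    # rep relied on the answer with a prospect (trust signal)

def summarize(logs: list[AnswerLog], eligible_users: int) -> dict[str, float]:
    """Report rate, adoption, and trust share computed from answer logs."""
    if not logs:
        return {"report_rate": 0.0, "adoption": 0.0, "used_in_call_share": 0.0}
    n = len(logs)
    return {
        "report_rate": sum(e.reported_wrong for e in logs) / n,
        "adoption": len({e.user for e in logs}) / eligible_users,
        "used_in_call_share": sum(e.used_in_call for e in logs) / n,
    }

def time_saved_pct(minutes_before: float, minutes_after: float) -> float:
    """Percent reduction in time for the same task, measured before and after rollout."""
    return 100 * (minutes_before - minutes_after) / minutes_before

logs = [
    AnswerLog("ana", reported_wrong=False, used_in_call=True),
    AnswerLog("ana", reported_wrong=True, used_in_call=False),
    AnswerLog("ben", reported_wrong=False, used_in_call=True),
    AnswerLog("ben", reported_wrong=False, used_in_call=False),
]
print(summarize(logs, eligible_users=10))  # report_rate 0.25, adoption 0.2, used_in_call_share 0.5
print(time_saved_pct(120, 45))             # 62.5
```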

Where Mojar Fits: Honest Positioning

We built Mojar because we experienced these problems firsthand. Here's where we think we add value—and where we don't.

What Mojar Does Well

Contradiction detection: Our maintenance agent actively scans for inconsistencies across your documents. When your sales deck claims "real-time processing" but your product docs say "batch mode," we surface that conflict before it reaches a prospect.

Content maintenance: We don't just retrieve content—we help you maintain it. Automated flags for stale documents, references to deprecated features, and content that hasn't been reviewed. The goal is a knowledge base you can actually trust.

Universal document support: PDFs (including scanned documents), Word, spreadsheets, presentations, markdown, HTML. Our hybrid parsing engine handles the messy reality of enterprise content.

Source attribution: Every answer shows exactly where it came from. No black boxes, no mysterious AI outputs—traceable responses backed by your actual documents.

Where We're Still Building

Deep CRM integration: We have API access, but native Salesforce/HubSpot widgets are on the roadmap, not shipped.

Real-time external data: Connecting internal knowledge with live market intelligence is a capability we're developing, not a current strength.

When Mojar Isn't the Right Choice

If you need:

- Robust sales analytics and content engagement tracking → Look at Highspot or Seismic

- A general-purpose AI assistant → ChatGPT or Claude work fine for tasks that don't require internal knowledge

We're focused on a specific problem: making your internal knowledge trustworthy, findable, and consistent. If that's your pain point, we should talk. If it's not, we'll tell you.

Getting Started: A Practical Path Forward

If you're convinced that AI-powered knowledge management is worth exploring, here's a realistic path forward:

Phase 1: Audit (2-4 weeks)

- Inventory your current content across all systems

- Identify the highest-value use cases (RFPs? Onboarding? Competitive intel?)

- Document your biggest pain points with specific examples

- Talk to your reps about what they actually need during live deals

Phase 2: Pilot (4-8 weeks)

- Start with a contained use case and limited document set

- Measure baseline metrics before implementation

- Deploy with a small group of power users

- Gather feedback aggressively—what works, what doesn't, what's missing

Phase 3: Expand (Ongoing)

- Add document sources based on pilot learnings

- Expand user access incrementally

- Establish maintenance workflows and ownership

- Build feedback loops that drive continuous improvement

Questions to Ask Any Vendor

- How do you handle source attribution? Can I see exactly which documents informed each answer?

- What happens when documents contradict each other?

- How do you identify outdated content?

- What's required to maintain the system over time?

- Can I see a demo with my actual documents, not your curated examples?

The Bottom Line

Revenue teams are drowning in content they can't trust. "Is this the latest deck?" is a question that shouldn't require three Slack messages and a folder archaeology expedition to answer.

RAG technology offers a path forward—not by creating more content, but by making existing content trustworthy, findable, and consistent. The key differentiator isn't retrieval speed or AI sophistication. It's whether the system helps you maintain content quality over time, or just surfaces whatever mess already exists.

The organizations that get this right will have a meaningful advantage: reps who can find accurate answers in seconds, marketing teams confident their messaging is consistent, and leadership that can trust the information flowing through their revenue engine.

The organizations that don't will keep asking Slack.

Next Steps

Explore related content:

- "Is This the Latest Deck?" Why Nobody Knows Which Version Is Correct — Deep dive into the version control problem

- How AI Can Detect Conflicting Sales Messaging Before Your Prospects Do — Mojar's core differentiator explained

- Sales Reps Spend 20-30% of Time on RFPs—Here's What That Actually Costs — The ROI case for automation

Ready to see it in action? Request a demo with your actual documents—not our curated examples.

Frequently Asked Questions

What is RAG for marketing and sales?

RAG (Retrieval-Augmented Generation) is an AI architecture that grounds responses in your actual documents: sales decks, playbooks, product specs, and marketing assets. Unlike generic AI that answers from its training data and can hallucinate, RAG retrieves relevant content from your knowledge base before generating an answer, which improves accuracy and makes source attribution possible.

How is RAG different from traditional sales enablement platforms?

Traditional platforms store and organize content but can't verify accuracy or detect conflicts. RAG systems query your documents at the moment a question is asked, can surface contradictions between sources, and provide answers with citations. The key difference: RAG works with what your content means, not just where your files live.

Can RAG detect conflicting messaging between sales and marketing?

Yes, and it is an often-overlooked capability. Advanced RAG systems can identify when your sales deck claims 'real-time processing' while product docs say 'batch mode,' or when marketing promises features that product no longer supports. Catching these contradictions early prevents brand-damaging inconsistencies.

How does RAG help with RFP responses?

Sales teams spend 20-30% of their time on RFP responses, often hunting through scattered documents. RAG can reduce response time by 60-80% by automatically pulling relevant answers from product docs, past proposals, pricing sheets, and compliance documentation, with source citations included.

How is RAG different from ChatGPT?

Out of the box, ChatGPT has no access to your internal documents and can hallucinate answers about them. RAG grounds every response in your actual content (playbooks, pricing, product specs) with traceable sources. When a rep asks 'What's our enterprise pricing?', RAG returns the pricing in your documents, not a made-up answer.

How does RAG handle outdated content?

Basic RAG retrieves whatever exists, including stale content. Advanced RAG platforms include maintenance agents that flag outdated information, detect documents that haven't been reviewed, and identify content that contradicts newer sources. This 'knowledge base hygiene' is what separates useful systems from liability risks.

Does RAG work for enterprise revenue teams?

RAG scales well for enterprise deployments with thousands of documents across multiple teams. Key requirements: robust access controls, audit trails, integration with existing systems (CRM, DAM, SharePoint), and the ability to handle multi-region content variations. Most enterprise challenges are organizational, not technical.